Three Survey Papers on Fine-Grained Recognition

2021. 3. 16. 17:12ㆍDeep Learning

- A Survey of Recent Advances in CNN-Based Fine-Grained Visual Categorization

- A Systematic Evaluation: Fine-Grained CNN vs. Traditional CNN Classifiers

- Deep Learning for Fine-Grained Image Analysis: A Survey

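- Characteristics (challenges) of fine-grained recognition
  - High inter-class similarity and high intra-class variability: different classes look alike, while images of the same class can look very different.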

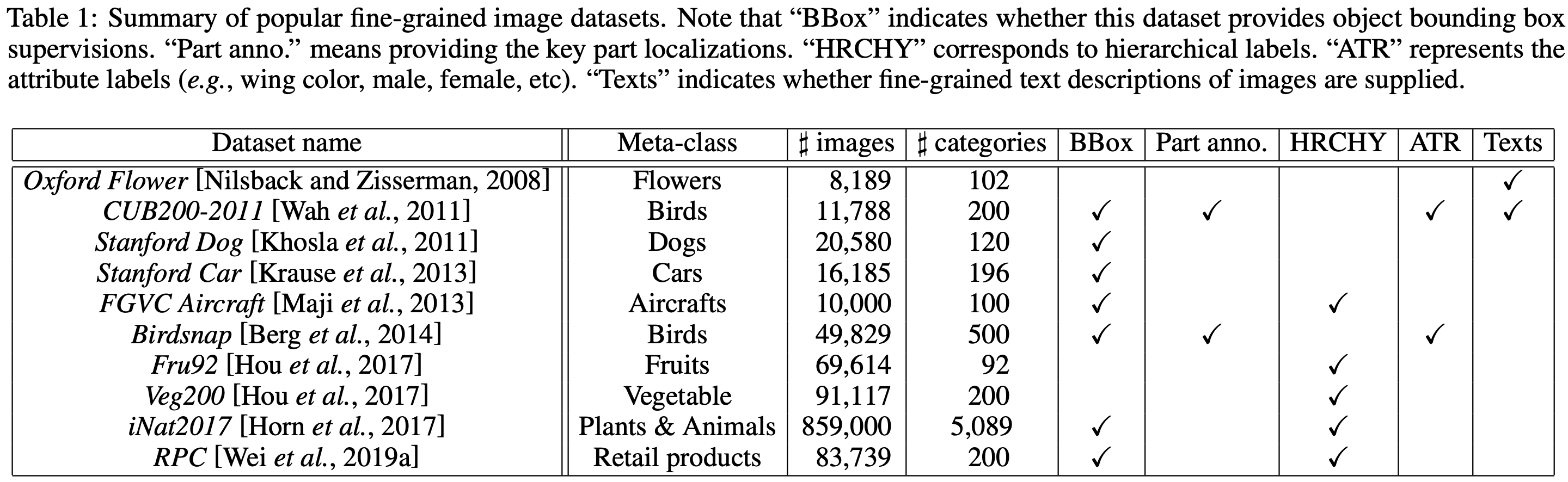
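The two challenges can be made concrete by comparing distances in a feature space. The following is a minimal sketch, assuming features are plain vectors and that Euclidean distance to class centroids is an acceptable proxy; the function name `class_distance_stats` and the toy data are hypothetical and do not come from the surveyed papers.

```python
import numpy as np


def class_distance_stats(features, labels):
    """Return (mean intra-class distance, mean inter-class centroid distance).

    Assumes at least two distinct classes are present.
    """
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    centroids = np.stack([features[labels == c].mean(axis=0) for c in classes])

    # Intra-class: average distance from each sample to its own class centroid.
    intra = np.mean([
        np.linalg.norm(features[labels == c] - centroids[i], axis=1).mean()
        for i, c in enumerate(classes)
    ])

    # Inter-class: average distance between every pair of distinct centroids.
    pairwise = np.linalg.norm(centroids[:, None, :] - centroids[None, :, :], axis=-1)
    inter = pairwise[np.triu_indices(len(classes), k=1)].mean()
    return float(intra), float(inter)


# Toy data: samples within a class are far apart, but the two classes sit close together.
toy_features = [[0, 0], [0, 4], [1, 0], [1, 4]]
toy_labels = [0, 0, 1, 1]
intra, inter = class_distance_stats(toy_features, toy_labels)
print(intra, inter)  # 2.0 1.0
```

In this toy case the intra-class spread is larger than the gap between classes, which is the situation that makes fine-grained categories hard to separate.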

- Datasets
  - Roughly in order of popularity
    - CUB-200-2011 > Stanford Cars > FGVC-Aircraft > Oxford Flowers > Stanford Dogs > ...

- Two ways to approach the problem
  - Strongly supervised
    - Besides the category label, each image also comes with a bounding box or part location annotation.
  - Weakly supervised
    - Only the category label is available.
    - Three directions
      - Part-based approach
        - Design an architecture that tries to find the important parts.
      - End-to-end
        - Approaches that modify the architecture
        - Approaches that modify the loss
      - With external information
        - Web images, knowledge graphs, or text are available.
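The difference between the two supervision settings comes down to what is stored per image. Here is a minimal sketch of one possible annotation record; the class `FineGrainedSample`, its field names, the box convention, and the file names are all hypothetical and are not taken from any dataset's actual format.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Assumed convention for this sketch: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


@dataclass
class FineGrainedSample:
    image_path: str
    category: int                      # always present, in both settings
    bbox: Optional[Box] = None         # only present with strong supervision
    parts: Dict[str, Tuple[float, float]] = field(default_factory=dict)  # part name -> (x, y)

    @property
    def is_strongly_annotated(self) -> bool:
        # Strong supervision means a box or part locations exist besides the label.
        return self.bbox is not None or bool(self.parts)


# Weakly supervised sample: category label only.
weak = FineGrainedSample("img_001.jpg", category=17)

# Strongly supervised sample: category plus box and part locations.
strong = FineGrainedSample(
    "img_002.jpg",
    category=17,
    bbox=(30.0, 42.0, 210.0, 180.0),
    parts={"beak": (55.0, 70.0)},
)
```

A weakly supervised method would only ever read `category`, so it can train on either kind of record.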

End-to-End in detail

- Architecture
  - Mostly high-order mixing of CNN features.
    - Hierarchical Bilinear Pooling (HBP, ECCV 2018)
    - Deep Bilinear Transformation (DBT, NeurIPS 2019)
- Loss
  - GCE loss (AAAI 2020): focuses on the top-k confusing negative classes
  - Hinge loss (API-Net, AAAI 2020): score ranking regularization
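To make the two end-to-end ideas more tangible, here is a minimal NumPy sketch. It shows plain bilinear pooling (the basic second-order feature mixing that HBP and DBT build on, not either of those methods themselves) and a generic margin-based ranking hinge, which illustrates the general shape of a score ranking regularizer rather than API-Net's exact formulation. The function names, argument names, and the margin value are assumptions made for illustration.

```python
import numpy as np


def bilinear_pool(feat_a, feat_b, eps=1e-12):
    """Plain bilinear pooling of two feature maps shaped (C, H, W).

    Both maps must share the same spatial size (H, W).
    """
    ca, h, w = feat_a.shape
    cb = feat_b.shape[0]
    a = feat_a.reshape(ca, h * w)
    b = feat_b.reshape(cb, h * w)
    # Average of per-location outer products: pairwise channel interactions.
    pooled = (a @ b.T) / (h * w)                   # shape (ca, cb)
    vec = pooled.reshape(-1)
    vec = np.sign(vec) * np.sqrt(np.abs(vec))      # signed square root
    return vec / (np.linalg.norm(vec) + eps)       # L2 normalization


def ranking_hinge(should_be_higher, should_be_lower, margin=0.05):
    """Penalize cases where the first score does not beat the second by `margin`."""
    higher = np.asarray(should_be_higher, dtype=float)
    lower = np.asarray(should_be_lower, dtype=float)
    return float(np.maximum(0.0, lower - higher + margin).mean())


rng = np.random.default_rng(0)
fa = rng.standard_normal((4, 3, 3))   # toy feature map with 4 channels
fb = rng.standard_normal((5, 3, 3))   # toy feature map with 5 channels
descriptor = bilinear_pool(fa, fb)
print(descriptor.shape)  # (20,)
```

The pooled descriptor grows with the product of the channel counts, which is one reason follow-up work looks for cheaper ways to mix features.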

Fine-Grained CNN vs Traditional CNN

- Conclusion
  - Traditional CNNs win.
    - DenseNet161 performs best.
    - Traditional CNNs benefit from ImageNet pretraining.

Third paper: covers more than image classification

- Introduces few-shot learning papers
- Worth reading further; there is a lot of good material.
